Aros is a collaborative project for the course AIxDesign. Because of the growing importance of AI in design fields such as graphic design and 3D animation, I chose this course to explore the possibilities of generative AI and how it is starting to influence the creative industries.

The course started with different research projects, each focused on innovation, speculation, and critical thinking around current megatrends.

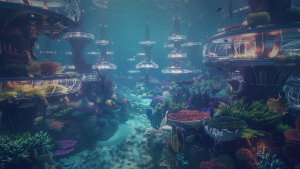

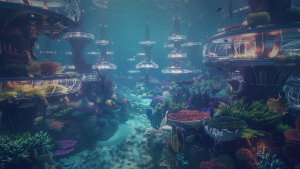

From there, we decided to focus on AROS, an innovative underwater habitat that explores the future of human life in isolated environments. To visualise this future scenario, we created a virtual reality experience using only AI tools, testing both the creative potential and the current limitations of generative AI in 3D production.

We used Unity as our development platform and tested it on the Meta Quest 3 and 3S.

Summer semester 2025

Luka Sandvoss, Jacqueline Lehmann, Elisabeth Schenk

Megatrend: Urbanisation

In the face of rapid urbanisation, climate change and rising sea levels, Aros addresses the urgent need to reconsider how and where we live. It translates global challenges into a potential future scenario powered by emerging technologies such as solar energy, aquaponics, and circular architecture.

Rather than viewing underwater habitats as mere technical solutions, Aros considers them to be cultural statements that raise new questions around sustainability, equity and belonging.

What is AROS?

AROS was one of the first underwater habitats to be constructed in response to growing crises on the surface of the planet. Designed as a sanctuary for humanity, the station comprised sleek, circular modules of reinforced glass and metal that were engineered to house a variety of functions.

But life in Aros was fragile. Now, Aros lies silent, with abandoned games and flickering terminals, and desperate messages scrawled on the walls.

The Experience

The exploration begins in an entry room with a compact, clean and positive atmosphere, where posters of the research station hang on the walls to introduce the player to its former atmosphere. From there, players move through a long glass tunnel offering a wide, immersive view of the surrounding ocean, complete with coral reefs and reflections of light on the metal walls. The tunnel leads directly into the communal area. A large panoramic window provides a breathtakingly haunting view of the deep ocean.

Scattered throughout the room are signs of past life: worn sofas, children’s toys, scattered papers and clothes, and empty alcohol bottles. The advertisement posters of Aros are torn, there is graffiti on the walls, and some of the windows are cracked. These environmental details suggest a time of human presence, now replaced by abandonment.

From the common room, several tunnels branch off to other parts of the station. One is marked by flickering ‘EXIT’ signs, hinting at evacuation or escape routes.

The app offers a visual exploration of the potential and limitations of AI-generated 3D assets. Certain objects throughout the environment can be interacted with, and these objects float gently to distinguish them from static elements. Each object carries a unique narrative that is triggered when the player interacts with it, allowing them to listen to audio stories and uncover fragments of the station’s history.
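The gentle floating motion can be illustrated with a simple sine wave. This is a minimal sketch in Python rather than the Unity script used in the project, which is not reproduced here; the function names, the amplitude, and the period are all hypothetical values chosen for illustration.

```python
import math


def bob_offset(time_s: float, amplitude_m: float = 0.05, period_s: float = 2.0) -> float:
    """Vertical offset in metres of a gently floating object at a given time.

    amplitude_m and period_s are illustrative assumptions, not project values.
    """
    return amplitude_m * math.sin(2.0 * math.pi * time_s / period_s)


def floating_height(base_height_m: float, time_s: float,
                    amplitude_m: float = 0.05, period_s: float = 2.0) -> float:
    """Height of the object: its resting height plus the sine-wave offset."""
    return base_height_m + bob_offset(time_s, amplitude_m, period_s)
```

In an engine, a function like `floating_height` would typically be evaluated once per frame with the elapsed time, so the object rises and falls around its resting height.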

The Process

Our pipeline is visualized in the workflow chart. We used ChatGPT for text and concept generation, DeepL Write for text refinement and translation, Midjourney for image generation, Veo 3 for video generation, Adobe Photoshop for image refinement, ElevenLabs for sound and voice generation, and Meshy AI for 3D asset creation and animation.

Logo

The AROS logo features a modern, minimalist wordmark with geometric sans-serif typography. The stylized open “A” and cut “R” introduce individuality, while the smooth curves of the “O” and “S” add balance and elegance. With its thin strokes and spacious kerning, the logo conveys sophistication, calmness, and futuristic precision. The overall design suggests technology, clarity, and a calm, forward-thinking identity — fitting for a sci-fi or underwater research project.

Naming

The naming process for AROS was driven by the idea that one of its key aspects is its location beneath water, rather than beneath the sky.

Eventually, we chose the Japanese word “Sora” (meaning sky) and reversed it to create “Aros.” “Aros” also exists as a word in Old Norse, meaning “river mouth”: a symbolic image for transitions and connections between ecosystems, technologies and habitats. It captured the project’s vision perfectly.

2D Assets

The 2D assets were partly an experiment in image and poster generation with Midjourney and ChatGPT, and partly a way to convey the visual identity and atmosphere of Aros in a simple and impactful manner. Each poster highlights different aspects of the utopia.

2D Animation

For the logo animation, we used Renderforest for initial inspiration and then asked ChatGPT to write a prompt for Veo 3, describing soft underwater light rays and a minimal, smooth logo reveal. The result is a calm, atmospheric animation that fits the futuristic tone of AROS.

Coding

Coding was largely supported by AI, which handled many tasks and saved us a significant amount of work. Simple logic scripts worked surprisingly well, and ChatGPT could take over much of the basic functionality.

However, more complex, interdependent scripts and cross-referencing functions proved challenging. Setting up the VR application itself was also not straightforward, and integrating everything into the XR Meta Stack caused issues in several places.

Because of these limitations, having a fundamental understanding of coding remained essential to solving problems and ensuring the project worked as intended.

Sound

To help players understand the storyline, information is delivered primarily through sound—both from the surrounding environment and during interactions.

The narrative segments were created with ChatGPT and brought to life using ElevenLabs for voiceovers. Complementary sound effects were also crafted to enhance immersion and deepen the storytelling experience.

Using Unity’s position-based audio, we added subtle water sounds and faint elevator-like background music to strengthen the feeling of being inside an underwater facility while supporting the overall dystopian atmosphere.
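To illustrate the idea behind position-based audio, the following Python sketch computes a volume gain from the listener's distance to a sound source using a generic inverse-distance model. It is an assumption-laden illustration, not Unity's exact rolloff curve and not the project's configuration; `distance_gain`, `min_distance_m`, and `max_distance_m` are hypothetical names and values.

```python
def distance_gain(distance_m: float, min_distance_m: float = 1.0,
                  max_distance_m: float = 20.0) -> float:
    """Inverse-distance volume gain in the range (0, 1].

    Inside min_distance_m the source plays at full volume. Beyond
    max_distance_m the gain stops falling. Both limits are illustrative.
    """
    if min_distance_m <= 0 or max_distance_m <= min_distance_m:
        raise ValueError("expected 0 < min_distance_m < max_distance_m")
    # Clamp the distance so the gain stays within a predictable range.
    clamped = max(min_distance_m, min(distance_m, max_distance_m))
    return min_distance_m / clamped
```

With these example limits, a water sound two metres away would play at half volume, while a source right next to the player plays at full volume.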

3D Assets

Artificial intelligence is rapidly transforming 3D modeling, making asset creation faster and more accessible than ever before. The current leaders in AI-driven 3D generation are Meshy AI, Tencent Hunyuan3D, 3D AI Studio, Tripo AI, and Spline, each offering unique strengths that make them stand out in the rapidly evolving landscape of 3D asset creation.

You can find out more about Meshy AI in our documentation.

Meshy AI, launched in 2023, is a browser-based AI platform that enables users to generate 3D assets from text prompts or reference images.

Meshy AI offers an extensive and diverse asset library made up of 3D models generated by other users. All of these assets are freely available to download and can be used in projects. For our project, we aimed to make the most of this resource by incorporating as many of these community-created assets as possible.

While assets from the asset library cannot be modified or customized, generating your own model offers more flexibility. You can adjust various aspects such as polygon density, choose between quads or triangles, perform rough remeshing, retexture models, and adapt the overall style.

For assets that needed to match a specific style, such as walls, windows, or other architectural elements, we used ChatGPT to create concept art, which then served as reference images for Meshy AI.

However, we encountered several issues during the export process, particularly with texturing.

Some models, such as the bar, shelves and lamp, were exported with only one flat base colour instead of the fully detailed textures we had originally designed.

Additionally, certain meshes, such as the door, lacked structural consistency.

We also experimented with object animation. The original idea was to have fish gently swimming around the facility to bring the environment to life. However, Meshy AI currently only supports animating humanoid models in a T- or A-pose. To test this feature, we used a garden gnome as a placeholder. The animation and rigging process itself was surprisingly quick and straightforward, and the results were easy to import.

However, the animations weren’t flawless. We observed unnatural joint movements, overlapping meshes, and visible mesh distortions. These issues became particularly noticeable during larger motions, highlighting the current limitations of Meshy AI’s automated rigging and animation pipeline.

Current Issues

Topology

The models are overly dense, using far more polygons than necessary—often with irregular and uneven distribution. Instead of using clean quads, the geometry relies on triangles, which disrupts edge flow and causes problems in deformation during animation.

In real-time engines like Unity or Unreal, the excessive geometry also increases rendering costs and can lead to performance drops.
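The cost of dense geometry can be estimated before import. Real-time engines render triangles, so a face with n sides contributes n - 2 triangles once triangulated. The Python sketch below counts triangles and computes how far a model would need to be reduced to fit a budget; the function names and any budget figures are hypothetical, not measurements from the project.

```python
def triangle_count(face_sizes: list[int]) -> int:
    """Number of triangles after triangulation.

    face_sizes holds the vertex count of each face (3 for a triangle,
    4 for a quad). An n-sided face yields n - 2 triangles.
    """
    total = 0
    for n in face_sizes:
        if n < 3:
            raise ValueError("a face needs at least 3 vertices")
        total += n - 2
    return total


def reduction_ratio(current_triangles: int, budget_triangles: int) -> float:
    """Fraction of triangles to keep so the model fits the budget.

    Returns 1.0 when the model is already within the budget.
    """
    if current_triangles <= 0:
        raise ValueError("current_triangles must be positive")
    return min(1.0, budget_triangles / current_triangles)
```

For example, a generated model with 200,000 triangles and an assumed budget of 20,000 would need to keep only about a tenth of its geometry, which gives a rough target for remeshing.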

Textures

Textures are often blurred or low in detail due to limited resolution and lack of physically based rendering logic. Materials such as glass may lack transparency and appear solid because the texture generation does not simulate light transmission or refraction.

Highlights and reflections are sometimes baked into the texture rather than dynamically rendered, which can cause inconsistencies when lighting changes in the scene.

UV Maps

The UV maps often contain overlapping islands, inconsistent scaling, and irregular seam placement. These issues result from the AI’s lack of structured unwrapping logic or awareness of surface flow. Misaligned UVs can lead to texture stretching, distortion, and artifacts, especially on curved or complex surfaces. This affects how textures and shaders are projected and can lead to errors during export, which are often difficult and time-consuming to manually correct.
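Inconsistent UV scaling can be made measurable. One common approach, sketched here in Python as an assumption rather than as the method used in the project, compares each triangle's area in UV space with its area on the 3D surface. All function names are hypothetical, and the input is a plain list of triangles rather than a real mesh file.

```python
import math


def triangle_area_3d(a, b, c) -> float:
    """Area of a 3D triangle from half the cross product magnitude."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def triangle_area_uv(a, b, c) -> float:
    """Area of a 2D triangle in UV space."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def texel_density(tri_3d, tri_uv) -> float:
    """UV units per world unit for one triangle: sqrt(UV area / surface area)."""
    area_3d = triangle_area_3d(*tri_3d)
    if area_3d == 0:
        raise ValueError("degenerate 3D triangle")
    return math.sqrt(triangle_area_uv(*tri_uv) / area_3d)


def density_spread(triangles) -> float:
    """Ratio of the highest to the lowest texel density in a mesh.

    triangles is a list of (tri_3d, tri_uv) pairs. A value of 1.0 means
    every triangle is scaled uniformly; larger values mean uneven scaling.
    """
    densities = [texel_density(tri_3d, tri_uv) for tri_3d, tri_uv in triangles]
    lowest = min(densities)
    if lowest == 0:
        return math.inf
    return max(densities) / lowest
```

A spread close to 1.0 suggests evenly scaled UV islands, while a large spread points to the kind of inconsistent scaling that shows up as stretched or blurry textures.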

Structural & Semantic Errors

AI-generated 3D models often lack true object logic, relying on pattern recognition rather than understanding structure and function. This leads to issues like incorrect anatomy, floating parts, broken symmetry, and non-functional designs. These flaws frequently cause further problems during rigging and animation.

As a result, the errors reduce realism and usability, requiring manual cleanup.

